There is a lot of talk about AI, LLMs, GPTs, Gems, agents, and more. In between learning about and exploring these fast-growing, fast-changing technologies, we also have to eat!

Our family has come to love sourdough bread, and we wanted to bake our own too. Working with the oven and getting consistently good results felt challenging, though, so we kept postponing it.

Recently we got a sourdough starter kit and took the plunge. I also decided to enlist some of the latest AI tools to help us.

My prompt for the AI engines was:

Prompt: Make a sequence diagram for baking sourdough bread

I tried it on three or four LLM engines and was glad to get clear, simple outputs, including Mermaid diagrams. I was pleasantly surprised that a locally hosted model produced the best results.

Google Gemini and ChatGPT

Trying the prompt in their ready-to-use chat experiences yielded a straightforward sequence diagram in Mermaid script form:

```mermaid
sequenceDiagram
    participant Baker
    participant Starter
    participant Dough
    participant Oven

    Baker->>Starter: Feed starter
    Starter-->>Baker: Starter is ready
    Baker->>Dough: Mix flour, water, salt, and starter
    Dough-->>Baker: Dough is formed
    Baker->>Dough: Bulk fermentation (several hours)
    loop Stretch and Fold (Every 30 mins)
        Baker->>Dough: Stretch and fold
    end
    Dough-->>Baker: Dough is fermented
    Baker->>Dough: Shape the dough
    Baker->>Dough: Final proof (overnight)
    Dough-->>Baker: Dough is proofed
    Baker->>Oven: Preheat oven
    Baker->>Dough: Score the dough
    Baker->>Oven: Bake the bread
    Oven-->>Baker: Bread is baked
    Baker->>Baker: Enjoy fresh sourdough!
```

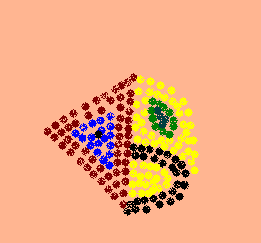

Translated into mermaid diagram, we get this
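Because a Mermaid sequence diagram is just text, it can also be generated from a plain list of steps, which makes it easy to tweak timings or add a step without re-prompting an LLM. Below is a minimal Python sketch of that idea. The helper names (`Message`, `Loop`, `to_mermaid`) are hypothetical and not part of any library; the step list simply mirrors the diagram the chat assistants produced.

```python
from dataclasses import dataclass, field
from typing import List, Union


@dataclass
class Message:
    """One arrow in the diagram (hypothetical helper type)."""
    sender: str
    receiver: str
    text: str
    reply: bool = False  # replies are drawn with the dashed -->> arrow


@dataclass
class Loop:
    """A repeated group of messages, rendered as a Mermaid loop block."""
    label: str
    messages: List[Message] = field(default_factory=list)


Step = Union[Message, Loop]

PARTICIPANTS = ["Baker", "Starter", "Dough", "Oven"]

# Mirrors the diagram above; edit this list to change the bake schedule.
SOURDOUGH_STEPS: List[Step] = [
    Message("Baker", "Starter", "Feed starter"),
    Message("Starter", "Baker", "Starter is ready", reply=True),
    Message("Baker", "Dough", "Mix flour, water, salt, and starter"),
    Message("Dough", "Baker", "Dough is formed", reply=True),
    Message("Baker", "Dough", "Bulk fermentation (several hours)"),
    Loop("Stretch and Fold (Every 30 mins)",
         [Message("Baker", "Dough", "Stretch and fold")]),
    Message("Dough", "Baker", "Dough is fermented", reply=True),
    Message("Baker", "Dough", "Shape the dough"),
    Message("Baker", "Dough", "Final proof (overnight)"),
    Message("Dough", "Baker", "Dough is proofed", reply=True),
    Message("Baker", "Oven", "Preheat oven"),
    Message("Baker", "Dough", "Score the dough"),
    Message("Baker", "Oven", "Bake the bread"),
    Message("Oven", "Baker", "Bread is baked", reply=True),
    Message("Baker", "Baker", "Enjoy fresh sourdough!"),
]


def render_message(message: Message, indent: str) -> str:
    """Format one message line; solid ->> for requests, dashed -->> for replies."""
    arrow = "-->>" if message.reply else "->>"
    return f"{indent}{message.sender}{arrow}{message.receiver}: {message.text}"


def to_mermaid(participants: List[str], steps: List[Step]) -> str:
    """Render participants and steps as Mermaid sequenceDiagram source."""
    lines = ["sequenceDiagram"]
    lines += [f"    participant {name}" for name in participants]
    for step in steps:
        if isinstance(step, Loop):
            lines.append(f"    loop {step.label}")
            lines += [render_message(m, " " * 8) for m in step.messages]
            lines.append("    end")
        else:
            lines.append(render_message(step, " " * 4))
    return "\n".join(lines)


if __name__ == "__main__":
    print(to_mermaid(PARTICIPANTS, SOURDOUGH_STEPS))
```

Running the script prints a diagram equivalent to the chat output, ready to paste into any Mermaid renderer. Requests use the solid `->>` arrow and replies the dashed `-->>` arrow, the same convention the generated script follows.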

Locally Hosted LLM

Most of us prefer not to share more data than we have to, so why not use a locally hosted LLM? I had set up a self-hosted AI with LLMs (SHAIL) on my Apple Silicon Mac. I ended up using the open-source DeepSeek-R1:14b model running within open-webui, and it performed really well. Besides the sequence diagram, it also showed me its thinking process and the diagram script, all with only a modest penalty in generation time. My machine currently has limited RAM and GPU capacity, so the slower generation is no surprise.
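For readers who want to script the same prompt against a local model, here is a hedged Python sketch. It assumes the DeepSeek-R1:14b model is served by Ollama on its default port (`http://localhost:11434`), a common backend for open-webui that this post does not actually confirm, so adjust the URL and model tag to your own setup. It also assumes the reply wraps its reasoning in `<think>` tags, as DeepSeek-R1 usually does, and strips that part before pulling out any Mermaid fenced blocks. The function names are hypothetical helpers, not part of any library.

```python
import json
import re
import urllib.request
from typing import List

# Assumption: Ollama's default local endpoint and model tag. The post only
# says the model ran "within open-webui"; your backend URL may differ.
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL = "deepseek-r1:14b"
PROMPT = "Make a sequence diagram for baking sourdough bread"

FENCE = "`" * 3  # built indirectly so this snippet nests cleanly in Markdown
MERMAID_BLOCK = re.compile(FENCE + r"mermaid\s*\n(.*?)" + FENCE, re.DOTALL)
THINK_BLOCK = re.compile(r"<think>.*?</think>", re.DOTALL)


def build_request(prompt: str, model: str = MODEL) -> bytes:
    """JSON body for one non-streaming generate call."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return json.dumps(payload).encode("utf-8")


def strip_thinking(text: str) -> str:
    """Drop <think>...</think> reasoning (assumed DeepSeek-R1 output format)."""
    return THINK_BLOCK.sub("", text)


def extract_mermaid(text: str) -> List[str]:
    """Return the body of every mermaid fenced block in the text."""
    return [block.strip() for block in MERMAID_BLOCK.findall(text)]


def ask_local_model(prompt: str, url: str = OLLAMA_URL) -> str:
    """POST the prompt to the local server and return the generated text."""
    request = urllib.request.Request(
        url,
        data=build_request(prompt),
        headers={"Content-Type": "application/json"},
    )
    # Generous timeout: a 14B model on limited RAM can be slow.
    with urllib.request.urlopen(request, timeout=600) as response:
        return json.loads(response.read())["response"]
```

With the server running, `extract_mermaid(strip_thinking(ask_local_model(PROMPT)))` returns the diagram scripts from the model's final answer. Turning streaming off keeps the sketch simple at the cost of waiting for the whole reply, which matches the generation-time penalty described above.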

Here is the output sequence diagram generated by the local run.

In conclusion, it is incredible that the locally hosted model generated a Mermaid diagram and simple steps. Now it is time for me to bake the sourdough bread and see the results.

PS: We created the starter two days ago and fermented the dough last night; we will bake today.
